Microsoft "Best Positioned" To Benefit From AI Product Cycles, Goldman Says

Microsoft shares are down 16% from their mid-December peak and remain unable to reclaim the $500 level. The key question for traders is whether the pullback presents a buy-the-dip opportunity, as several positive AI tailwinds have emerged in recent weeks, most notably from the world's leading memory suppliers, reinforcing the AI infrastructure cycle and downstream demand visibility for hyperscalers like Microsoft.

For context, Goldman Sachs analysts led by Gabriela Borges recently met with senior executives at Microsoft's headquarters in Redmond, Washington.

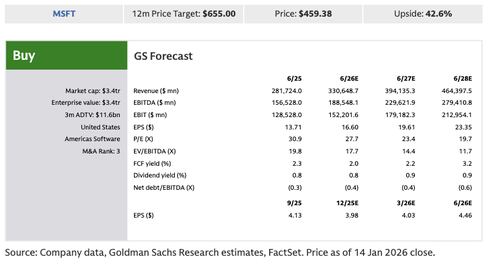

After the meetings, Borges and her colleagues remain bullish on the company, which they believe is the best positioned in their coverage universe to compound AI product cycles, with a solid path to $35 in EPS by FY30, implying 20%+ EPS growth.
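
For a rough sense of the arithmetic behind a path to $35 in EPS by FY30, the sketch below computes the compound annual growth rate (CAGR) linking a starting EPS to a target EPS, and inverts it to find the starting EPS a given CAGR requires. It treats the 20%+ figure as an annual compound rate and uses a five-year horizon; both are assumptions here, since the base year and base-year EPS are not given, and the function names are hypothetical.

```python
def implied_eps_cagr(start_eps: float, target_eps: float, years: int) -> float:
    """Return the compound annual growth rate that takes start_eps to target_eps."""
    if start_eps <= 0 or years <= 0:
        raise ValueError("start_eps and years must be positive")
    return (target_eps / start_eps) ** (1 / years) - 1


def required_start_eps(target_eps: float, cagr: float, years: int) -> float:
    """Invert the CAGR formula: the starting EPS needed to reach target_eps."""
    return target_eps / (1 + cagr) ** years


if __name__ == "__main__":
    # Hypothetical inputs: $35 target, exactly 20% a year, five-year horizon.
    base = required_start_eps(35.0, 0.20, 5)
    print(f"Required starting EPS: ${base:.2f}")
    print(f"Implied CAGR check: {implied_eps_cagr(base, 35.0, 5):.1%}")
```

Under those assumptions, reaching $35 in year five at exactly 20% a year implies a starting EPS of roughly $14.07; any lower base implies growth above 20%.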

Key takeaways from Borges' discussions with Microsoft executives:

- MSFT's intense focus on fungibility to manage through the AI compute cycle, in periods of both supply constraints and potential excess supply, and to mitigate customer concentration risk;
- MSFT's intense focus on capacity planning that allows it to dynamically allocate compute across Azure customers, internal R&D and 1P apps;
- MSFT's gross margin advantage via its partnership with OpenAI (such that it can leverage models without paying additional LLM API fees) and its potential to abstract LLM value into an orchestration layer; and
- An evolution in enterprise conversations that are more supportive of Copilot adoption today vs. a year ago.

Here are the more in-depth takeaways from the conversations:

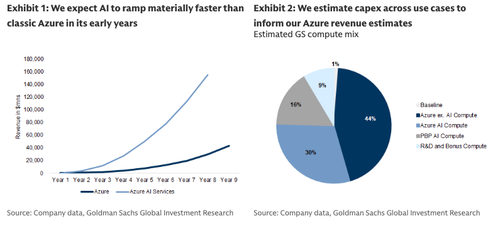

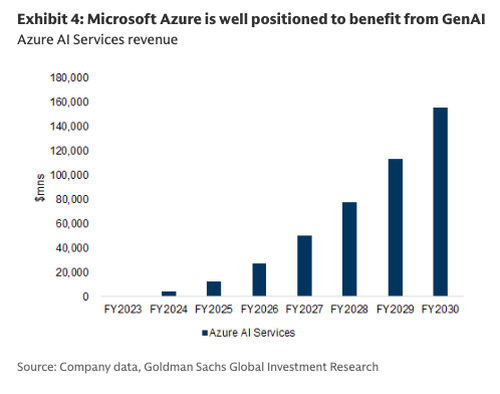

1. Microsoft expects AI margins to improve over time and sees margin expansion potential in core cloud: Management frames Azure gross margins through two lenses: 1) classic cloud, and 2) with AI. For Azure ex. AI, Microsoft believes that margins can continue to expand with scale, efficiency gains, and cost optimization, even while partly passing price reductions on to customers and absorbing server refresh cycles. For Azure with AI, Microsoft sees parallels between the AI cycle and the early stages of the cloud cycle, which was characterized initially by elevated costs and weak unit economics that improved with scale, utilization, and engineering efficiency. In the cloud transition, Microsoft set a medium-term gross margin target with annual targets and was within 100bps of its target each year. Relative to the cloud cycle, Microsoft believes it has more of a leadership position in the AI cycle, such that it can define the art of the possible. This includes leveraging deep engineering and operational knowledge built over years of managing the silicon and software layers to deliver such operating discipline, and a team that meets very frequently with a maniacal focus on capacity decisions and cost levers. Microsoft emphasized that the current AI cycle is characterized by far greater operational urgency and integration across business divisions (Cloud, Office, etc.). As an example, Microsoft identified an inefficient element in a model that was more compute-intensive than initially expected and, over the course of a single weekend, delivered an optimization that would otherwise have taken 2-3 months in the prior cloud era.

2. The role of LLMs: Microsoft drew parallels between the abstraction of the technology stack in the cloud and AI cycles. VMs abstracted hardware and containers abstracted much of the OS, simplifying deployment and improving long-term unit economics. Similarly, Microsoft sees LLMs as the next abstraction layer, abstracting application logic itself. Rather than hard-coding rules and workflows, applications are moving toward intent-driven execution, with models handling the reasoning and orchestration. Microsoft believes the focus today is overly weighted on the higher absolute headline cost of new models (e.g., GPT-5.2 vs. 5.1). However, next-generation models are designed to be much more efficient, with improving token efficiency. Today, due to competition, the performance benefit of new models is passed to end users in almost every instance. In the future, model providers may balance passing cost reductions to end users against delivering margins to shareholders. We believe the key swing factor will be the degree to which LLMs are differentiated vs. commoditized.

- Foundry has an opportunity to abstract LLMs: Foundry could become the abstraction layer for LLMs by sitting between applications and individual models, acting as the arbiter or control plane for routing, governance, and cost optimization. While AI workloads are compute-intensive today, Microsoft believes that declining token costs, efficiency gains, and model commonality over time will allow a greater share of value to accrue to the platform layers. Eventually, LLM-related COGS and the associated gross margin tax may become de minimis. Currently, Microsoft's IP rights to OpenAI models allow the company to avoid any gross margin tax on API calls, which we view as a competitive advantage vs. other software providers.
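
The control-plane idea can be made concrete with a toy router. In the sketch below, an application states its intent as a minimum quality bar, and the router filters a model catalog by a governance flag and that bar, then picks the cheapest eligible model. The `ModelOption` and `route_request` names, the model names, the quality scores and the per-token prices are all hypothetical; this is not Foundry's actual API or routing logic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    name: str                  # hypothetical model identifier
    quality: float             # assumed benchmark score in [0, 1]
    cost_per_1k_tokens: float  # assumed blended price in dollars
    approved: bool = True      # governance flag set by the platform


def route_request(models: list[ModelOption], min_quality: float) -> ModelOption:
    """Pick the cheapest approved model that clears the quality bar.

    The app states intent (a quality floor); the router, sitting between
    the app and the models, applies governance and cost optimization.
    """
    eligible = [m for m in models if m.approved and m.quality >= min_quality]
    if not eligible:
        raise LookupError("no approved model meets the quality requirement")
    return min(eligible, key=lambda m: m.cost_per_1k_tokens)


if __name__ == "__main__":
    catalog = [
        ModelOption("frontier-large", quality=0.92, cost_per_1k_tokens=0.030),
        ModelOption("frontier-small", quality=0.81, cost_per_1k_tokens=0.004),
        ModelOption("open-weights", quality=0.78, cost_per_1k_tokens=0.002, approved=False),
    ]
    print(route_request(catalog, min_quality=0.80).name)  # frontier-small
    print(route_request(catalog, min_quality=0.90).name)  # frontier-large
```

The point of the design is that the application never names a model: if a cheaper model later clears the same bar, the router shifts traffic to it, which is where platform-layer value would accrue as token costs fall.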

3. How Microsoft thinks through Azure build-out: Microsoft frames Azure build-out through a supply-demand lens, with different monetization profiles across workloads. When forecasting, Microsoft buckets commitments by customer size and compute type, noting that GPU-driven AI workloads tend to monetize immediately once capacity comes online, creating step-function growth tied directly to deployment timing. In contrast, traditional CPU and non-AI workloads historically exhibited smoother consumption curves, as supply closely matched demand and consumption was a function of customers progressing from POCs to production over time. CPU and storage that are now explicitly tied to AI use cases are also beginning to show lumpier, step-like consumption trends. Even in a supply-constrained environment, where Microsoft has some pricing leverage, there are still competitive dynamics at play for the largest customers.

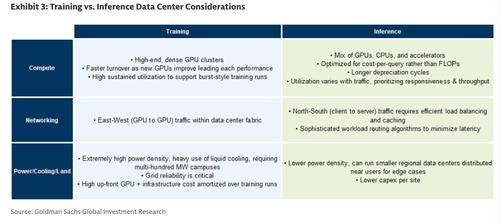

- Mix of training vs. inference: The mix of training vs. inference is an important determinant of overall consumption patterns. Training workloads tend to be episodic and intensive, while inference is more persistent and scales over time. Management noted that there is a large part of the training market in which Microsoft does not compete. Instead, the company is making strategic bets on both training and downstream value creation through inference. As customer use cases and hardware capabilities evolve, workloads will also evolve from their initial use cases. Thus, fungibility is crucial in reducing utilization risk and supporting changing demand profiles. The company has already seen cases where it was able to flip capacity from training to inferencing. Microsoft has visibility into whether customers are using GPUs for training or inferencing based on infrastructure-level usage patterns. From initial deployment, the company works with customers to optimize not only GPU usage but also networking.

- Considerations in a non-supply-constrained environment: In an environment where supply is not constrained, Microsoft would approach Azure capacity planning as a portfolio-level decision rather than a standalone Azure exercise, aggregating demand signals across Azure, M365, D365, internal R&D and model training workloads. There needs to be high conviction across a set of parameters for the company to pursue capacity deployment. Factors that would change this level of confidence include the model roadmap, expected customer value, price-performance, performance on Microsoft's hardware, agentic workflow adoption, and the enterprise roadmap for adopting the technology. For the largest customers, this can create step-function increases in capacity requirements across regions and SKUs, in which case Microsoft also evaluates regional demand, digital sovereignty requirements, and regulatory constraints.

- Managing build-out and oversupply risk for the long term: Given that many contracts with frontier model developers are multiyear and carry demand uncertainty over extended time horizons, Microsoft mitigates risk by prioritizing fleet-level fungibility. This approach allows the same infrastructure to support not only external customer workloads but also demand across Microsoft's ecosystem, including M365, GitHub, Dynamics, and Bing. This flexibility enables Azure to absorb customer demand variability while preserving utilization, and capital commitments are made selectively to workloads and contracts that align with Microsoft's long-term strategy and economic interests.
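
Fleet-level fungibility can be illustrated with a toy allocator. The sketch below assumes a single shared GPU pool served greedily in a fixed priority order; the demand categories, unit counts, priority ordering and the `allocate_fleet` name are all hypothetical and do not describe Microsoft's actual planning process. It shows how capacity released by one demand source is absorbed by the next rather than sitting idle.

```python
def allocate_fleet(
    capacity: int, demands: dict[str, int], priority: list[str]
) -> dict[str, int]:
    """Greedily allocate a shared, fungible GPU pool across demand sources.

    Sources are served in priority order; whatever remains after a
    higher-priority source is satisfied flows to the next one. Any
    capacity left after all sources is reported under "idle".
    """
    allocation: dict[str, int] = {}
    remaining = capacity
    for source in priority:
        granted = min(demands.get(source, 0), remaining)
        allocation[source] = granted
        remaining -= granted
    allocation["idle"] = remaining
    return allocation


if __name__ == "__main__":
    order = ["azure_external", "m365_copilot", "internal_rd"]
    # Hypothetical scenario 1: external demand is strong, internal R&D is squeezed.
    print(allocate_fleet(1000, {"azure_external": 600, "m365_copilot": 250, "internal_rd": 300}, order))
    # Hypothetical scenario 2: external demand halves; internal R&D absorbs the slack.
    print(allocate_fleet(1000, {"azure_external": 300, "m365_copilot": 250, "internal_rd": 300}, order))
```

In the second scenario, internal R&D receives its full 300 units and only 150 units sit idle, versus 450 idle units if that pool could serve external customers alone.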

4. BYOC (bring-your-own-chip) is not economically attractive for Microsoft: Management does not view BYOC as economically compelling or strategically advantageous for Microsoft. BYOC silos portions of the infrastructure stack and undermines the core efficiency levers that drive cloud margins: scale purchasing, full-stack integration and end-to-end optimization. Microsoft's margin advantage accrues through optimizing across the data center, power and cooling, networking, and silicon layers, rather than at any single component of the stack. Fragmenting the silicon component thus weakens system-level efficiencies and reduces performance and pricing power. Microsoft acknowledged that in select cases for the top 2-3 frontier model developers, it could make sense to shift upfront capital investment and duration risk to the customer. However, for the vast majority of customers, Microsoft's procurement and balance sheet advantages allow it to source and offer essentially any required chip architecture (e.g., TPUs) or capability without customers bearing upfront chip acquisition costs or complex SLAs. Consistent with this view, Microsoft's recently announced partnership with Anthropic did not involve a BYOC arrangement. More broadly, on offloading the risks of managing silicon to the customer, Microsoft noted that it already manages this risk through contract duration and decades of experience managing silicon lifecycles. Further, silicon lifetimes have proven more durable than initially assumed; e.g., Microsoft is able to sell fully depreciated H100s to new customers.

5. Fungibility underpins Microsoft's infrastructure strategy: Microsoft's infrastructure strategy is anchored to maintaining fungibility for as long as practically possible across demand planning, data center design, and "late binding" in the supply chain. This concept of fungibility includes deferring design and deployment decisions to the last feasible point in order to preserve flexibility as demand signals and technology evolve. This principle shaped the company's data center architecture, which has evolved further in the last year. For example, newer designs such as Fairwater employ two-story structures and 3D rack layouts to shorten cable and pipe runs, reducing latency and improving bandwidth between GPUs to maximize performance. More broadly, Microsoft standardizes network fleet topologies while accommodating both liquid and air cooling, engineering these modes to be operationally similar. Microsoft is willing to accept modest efficiency trade-offs, such as forgoing incremental performance gains from bespoke cooling or chip-specific designs, in exchange for greater capital agility and fungibility across workloads and silicon.

6. MAI roadmap and OpenAI IP: Microsoft frames MAI as complementary to its strategic partnership with OpenAI. With access to IP generated by OpenAI through 2032 (under the October 2025 agreement), Microsoft's MAI investments will focus on building forked, fine-tuned, and specialized variants of OpenAI models using Microsoft's proprietary, domain-specific data, with deep integration across its application ecosystem (e.g., Copilot). Examples thus far include MAI-Voice-1 and MAI-Image-1, purpose-built speech and image models deployed within Copilot and Bing Image Creator. MAI is intended to selectively develop first-party models for targeted workloads and use cases that can drive user engagement, product differentiation, and monetization across Microsoft's portfolio. Investment decisions are anchored in clear business and product outcomes to avoid funding science experiments or research projects.

7. Enterprise AI adoption and the evolution of "DIY" vs. packaged: Microsoft observed that relative to last year, customer Copilot conversations have moved from a discussion around ROI and "if" there will be adoption to a conversation more focused on "when" and to what degree adoption will occur. At the same time, Microsoft noted less uncertainty in its budget conversations compared with 4QCY last year, when customers often ended the year with surplus budget to protect against unknown macro factors. Microsoft noted that uncertainty creates the hardest budget environment to sell into: when budgets are healthy, Microsoft operates from a position of strength; when budgets are weak, Microsoft tends to outperform peers because of productivity gains tied to its platform approach.

Enterprise AI Adoption: Microsoft indicated that enterprise AI adoption is already broad, with a growing number of customers using AI in some form and usage intensity accelerating within that base. For Copilot specifically, Microsoft sees customers expanding deployments from a few hundred to several thousand licenses as familiarity with the product's capabilities increases. Adoption typically follows a land-and-expand motion, with customers piloting at smaller scale before broadening usage.

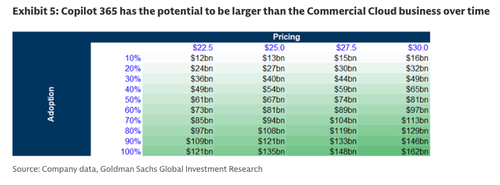

On Copilot pricing, Microsoft utilizes a value-based approach that examines customers' willingness to pay and the incremental value benefit relative to existing spend. Management assessed early adoption and usage patterns, initially optimizing for a higher price. To broaden top-of-funnel adoption, Microsoft introduced a lower-priced business SKU at $21 / user, with expectations that pricing SKUs will further diversify over time as use cases mature. Monetization is centered on feature expansion and consumption, with a long-term goal of delivering value that supports pricing above $30 / user. Productivity gains to date have varied by function and use case, with early benefits largely reflecting faster task completion rather than immediate cost savings. Internally, Microsoft is seeing the strongest traction in customer support functions, where improvements in time-to-resolution can translate more directly into budget savings.
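
To put the per-seat prices in context, the sketch below computes how many minutes a user would need to save each workday for a license to pay for itself. It treats the $21 and $30 figures as monthly per-user prices and assumes a $60 loaded hourly labor cost and 21 workdays a month; those inputs and the `breakeven_minutes_per_day` name are hypothetical, not figures from the note.

```python
def breakeven_minutes_per_day(
    monthly_price: float, hourly_cost: float, workdays_per_month: int = 21
) -> float:
    """Minutes of time saved per workday needed to offset a per-seat license.

    Assumes each saved minute is worth the employee's loaded hourly cost / 60.
    """
    cost_per_minute = hourly_cost / 60
    return monthly_price / (cost_per_minute * workdays_per_month)


if __name__ == "__main__":
    for price in (21.0, 30.0):  # business SKU and the $30 / user reference point
        minutes = breakeven_minutes_per_day(price, hourly_cost=60.0)
        print(f"${price:.0f}/user: {minutes:.1f} minutes saved per workday")
```

With those inputs, breakeven is about 1.0 minute per workday at $21 and about 1.4 minutes at $30. The result scales inversely with the hourly cost, so a lower-cost role needs proportionally more time saved to justify the same license.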

- DIY software vs. building on Microsoft's platform: On building agents in-house vs. on Microsoft's platform, management looks to monetize both approaches and expects them to coexist, though it is too early to determine which will ultimately account for the larger share. While many customers initially build agents internally, Microsoft believes complexities will compound over time as customers must maintain models, manage updates, and establish reliable connectors. These operational hurdles underpin Microsoft's value proposition, positioning the company as a long-term partner to help customers manage, scale, and sustain AI-driven solutions. Our industry work suggests that the pendulum may begin to swing back to packaged software as the ecosystem matures.

- Updates in GTM: Microsoft's sales incentives for Copilot have shifted from an initial focus on pricing to driving deployment and realized value. At launch, customer conversations centered on price and ROI. Microsoft's observations suggest that even modest daily usage delivers enough ROI to justify a full license. As adoption has broadened beyond early adopters, incentives are now aligned toward accelerating time-to-value. This includes reducing deployment friction with forward-deployed engineers (we believe Microsoft's investment here has grown), simplifying complex customer environments, and building greater integration across tools.

- On Claude Cowork: We have received many questions this week on Anthropic's launch of Claude Cowork, a version of its Claude Code tool with a user interface designed for non-coders. While Claude Cowork was not available at the time of our December meeting with Microsoft, we believe the question will be the extent to which Microsoft can maintain a moat through its entrenchment in existing knowledge-worker workflows, such that the quality of Copilot's outputs exceeds, or is differentiated from, that of third-party tools that may have only surface-level integrations into the Microsoft ecosystem.
