Nvidia's Transition To 800 Volts: A Look Inside The Next AI CapEx Surge

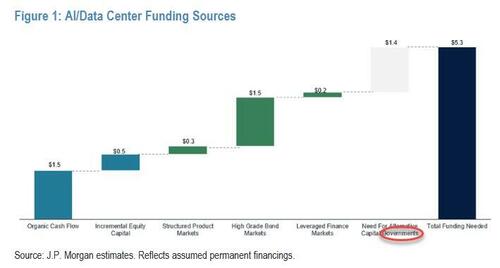

It took the market 3 years to do the math on the insatiable AI capex black hole, and specifically that it will require at least $5 trillion in funding over the next five years to prevent China from winning the AI war, a realization which came perilously close to bursting the AI bubble.

And just as the Street was getting used to the gargantuan AI funding hole that has to be filled, it is about to become even bigger.

In October, Nvidia announced more than a dozen partners as it prepares the data center industry for 800-volt DC power architectures and rack densities of 1MW, a revolutionary transition from the legacy 415-volt AC power architecture.

The GPU giant said in mid-October that it would unveil specs of the Vera Rubin NVL144 MGX-generation open architecture rack servers. The company is also set to detail ecosystem support for Nvidia’s Kyber system, which connects 576 Rubin Ultra GPUs, built to support increasing inference demands.

In response, some 20 industry partners are now showcasing new silicon, components, power systems, and support for the company’s latest 800-volt direct current (VDC) rack systems and power architectures that will support the Kyber rack architecture.

“Moving to 800VDC infrastructure from traditional 415 or 480 VAC three-phase systems offers increased scalability, improved energy efficiency, reduced materials usage, and higher capacity for performance in data centers,” Nvidia said. “Over 150 percent more power is transmitted through the same copper with 800VDC, eliminating the need for 200-kg copper busbars to feed a single rack.”

Of course, it also means a huge upgrade bill is coming.

According to DataCenter Dynamics, CoreWeave, Lambda, Nebius, Oracle Cloud Infrastructure, and Together AI are among the companies designing for 800-volt data centers. Nvidia noted that Foxconn’s 40MW Kaohsiung-1 data center in Taiwan is also using 800VDC. Additionally, Vertiv unveiled its 800VDC MGX reference architecture, combining power and cooling infrastructure architecture. HPE is announcing product support for Kyber.

Nvidia added that the new Vera Rubin NVL144 rack design features 45°C (113°F) liquid cooling, a new liquid-cooled busbar for higher performance, and 20x more energy storage to keep power steady. A central printed circuit board midplane replaces traditional cable-based connections for faster assembly and serviceability.

Kyber - the successor to Nvidia Oberon - will house 576 Rubin Ultra GPUs by 2027. The system features 18 compute blades rotated vertically, “like books on a shelf.”

Silicon providers for Nvidia's move to 800VDC include Analog Devices, AOS, EPC, Infineon, Innoscience, MPS, Navitas, onsemi, Power Integrations, Renesas, Richtek, ROHM, STMicroelectronics, and Texas Instruments.

Several of the chip providers noted that 800VDC requires a change in power chips and power supply units (PSUs), with many touting the capabilities of gallium nitride.

“This paradigm shift requires advanced power semiconductors, particularly silicon carbide (SiC) and gallium nitride (GaN), to handle the higher voltages and frequencies with maximum efficiency,” AOS said.

Power system component providers in on the project include BizLink, Delta, Flex, GE Vernova, Lead Wealth, LITEON, and Megmeet. The power system providers debuted new power shelves, liquid-cooled busbars, energy storage systems, and interconnects to meet the demands of the new Nvidia systems. Data center power system providers include ABB, Eaton, GE Vernova, Heron Power, Hitachi Energy, Mitsubishi Electric, Schneider Electric, Siemens, and Vertiv.

A number of vendors have announced new reference designs for 800VDC architecture that will allow operators to prepare for 1MW racks in the future. A number of operators are looking at providing ‘side-car’ racks that sit on either side of compute racks, providing the required power and cooling.

“The move to 800VDC is a natural evolution as compute density increases, and Schneider Electric is committed to helping customers make that transition safely and reliably,” said Jim Simonelli, CTO, data centers, Schneider Electric. “Our expertise lies in understanding the full power ecosystem, from grid to server, and designing solutions that integrate seamlessly, perform predictably, and operate safely.”

Understandably, Simonelli is ecstatic about the transformation: it means the AI space will need to order millions if not billions in new equipment from his company as AI's first major upgrade cycle kicks in. Others, namely those who have to pay for it, will be far less happy.

It's why, in a recent research note (available here to professional subs), Goldman's datacenter analyst Daniela Costa writes that investor debate around data center capex has shifted to the 800VDC architecture proposed by NVIDIA, to who the winners and losers in the capital goods sector will be, and, of course, to what the bill will be.

According to Costa, the adoption of 800VDC architecture in AI data centres represents a fundamental shift driven by escalating power demands and operational challenges of Artificial Intelligence workloads. The primary reason for this transition is to efficiently support the unprecedented power density required by modern AI racks, which are scaling from tens of kilowatts to well over a megawatt per rack, exceeding the capabilities of traditional 54V or 415/480VAC systems.
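To see why low-voltage distribution struggles at these densities, a rough back-of-the-envelope sketch helps. The snippet below compares the current needed to deliver 1MW at 54VDC, 415VAC three-phase, and 800VDC. It assumes a unity power factor and a balanced three-phase load (both simplifications not stated in the source), and the function names are illustrative only. It does not attempt to reproduce Nvidia's "over 150 percent" copper figure, which depends on conductor counts and ratings not detailed here.

```python
import math

def dc_current_amps(power_w: float, voltage_v: float) -> float:
    """Current drawn by a DC load: I = P / V."""
    return power_w / voltage_v

def three_phase_line_current_amps(power_w: float, line_voltage_v: float,
                                  power_factor: float = 1.0) -> float:
    """Line current of a balanced three-phase load: I = P / (sqrt(3) * V_LL * PF)."""
    return power_w / (math.sqrt(3) * line_voltage_v * power_factor)

RACK_POWER_W = 1_000_000  # 1MW rack, the density cited in the article

i_54 = dc_current_amps(RACK_POWER_W, 54)
i_415 = three_phase_line_current_amps(RACK_POWER_W, 415)  # assumes PF = 1
i_800 = dc_current_amps(RACK_POWER_W, 800)

# For a conductor of fixed resistance, resistive loss scales with I^2,
# so the loss ratio between two voltages is the squared current ratio.
loss_ratio_54_vs_800 = (i_54 / i_800) ** 2

print(f"54 VDC:            {i_54:,.0f} A")
print(f"415 VAC (3-phase): {i_415:,.0f} A per line")
print(f"800 VDC:           {i_800:,.0f} A")
print(f"I^2R loss at 54 V vs 800 V, same conductor: {loss_ratio_54_vs_800:,.0f}x")
```

The point of the comparison is simply that current, and with it conductor size and resistive loss, falls as distribution voltage rises for the same delivered power.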

NVIDIA projects that in the long run, its 800VDC architecture can reduce Total Cost of Ownership (TCO) by up to 30% due to improvements in efficiency, reliability, and system architecture, which is also linked to lower equipment maintenance costs (of course, in the short run it will represent another major spending hurdle). NVIDIA expects the transition to 800VDC data centres to coincide with the deployment of its Kyber rack architecture targeted for 2027.

There is a wide range of views across the industry on the share of data centres that could operate with NVIDIA's 800VDC architecture from 2028 onwards. For example, at Goldman's 17th Industrials Week, Legrand said it expects the shift to higher voltages to drive higher revenue potential per MW (from €2m/MW in traditional DCs to potentially €3m/MW), while noting that racks above 300kW should remain niche even by 2030E, with 3/4 of racks currently still below 10kW. However, Goldman also recently hosted an expert call on AIDC Power where the expectation was that 800VDC architecture could become mainstream, accounting for 80-90% of newly built DCs.
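The revenue-per-MW figures lend themselves to a quick sanity check. The sketch below computes the uplift implied by Legrand's €2m/MW and €3m/MW figures, plus a blended figure for a given adoption share. The linear blend and the function names are assumptions for illustration, not something the Goldman note or Legrand describes.

```python
TRADITIONAL_EUR_M_PER_MW = 2.0   # Legrand: traditional DCs
HIGH_VOLTAGE_EUR_M_PER_MW = 3.0  # Legrand: potential at higher voltages

def uplift_pct(old: float, new: float) -> float:
    """Percentage increase from old to new."""
    return (new - old) / old * 100

def blended_revenue_per_mw(high_voltage_share: float) -> float:
    """Hypothetical linear blend of the two figures by adoption share (0..1)."""
    return ((1 - high_voltage_share) * TRADITIONAL_EUR_M_PER_MW
            + high_voltage_share * HIGH_VOLTAGE_EUR_M_PER_MW)

uplift = uplift_pct(TRADITIONAL_EUR_M_PER_MW, HIGH_VOLTAGE_EUR_M_PER_MW)
print(f"Implied uplift per MW: {uplift:.0f}%")

# Adoption shares: the 80-90% range comes from the expert call (new builds only);
# 10% is an arbitrary stand-in for a "niche" scenario.
for share in (0.10, 0.80, 0.90):
    print(f"share={share:.0%}: EUR {blended_revenue_per_mw(share):.2f}m/MW")
```

Under these assumptions the gap between the niche and mainstream scenarios is most of the €1m/MW difference, which is why the adoption debate matters for supplier revenue.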

Regardless of the level of adoption, this technological shift means that budgets within some DCs could be allocated to meaningfully different product types vs today’s architectures, with possible implications for investors.

As a result, the purpose of Costa's note is to educate investors on those implications, which, while unlikely to drive company forecasts over the next two years, will soon start to affect valuations.

Below we excerpt from the Goldman report the bank's main observations on the upcoming key technological shifts and their impact:

- Transition to 800VDC distribution: The 800VDC architecture fundamentally changes how power is delivered in AI data centres, making traditional AC PDU and AC UPS systems largely unnecessary. This type of infrastructure requires a streamlined power path that centralizes power conversion and integrates battery storage at a facility level. Instead of individual UPS units providing backup power to specific racks or sections, the 800VDC architecture incorporates facility-level battery storage systems. These large-scale battery systems manage power fluctuations, provide ride-through power during outages, and ensure grid stability for the entire data center. This reduces AC PDU cabinet requirements by up to 75% and enables MW-scale rack densities. According to management comments at Goldman's 17th Annual Industrial Week, ABB holds a 100% share in MV DC UPS systems at the moment, a segment expected to become increasingly important as data centers move to centralized backup systems at medium voltage, while Schneider, Legrand and ABB have exposure today to AC PDUs and AC UPS.

- Sidecars key to get started with existing data center retrofit: Sidecars will be deployed in the first 800VDC rollout phase (2025–2027) as transitional modules before full native DC halls. They attach to MW-class racks, converting incoming AC to 800VDC and providing integrated short-duration energy storage to stabilize GPU load spikes. This design eliminates the need for traditional AC PDUs and UPS systems while enabling rack power to scale up to 1.2MW. Schneider is the key supplier of these sidecars, explicitly targeting racks at up to 1.2MW with integrated storage. These sidecars include DC-DC converters, which ABB also said at Goldman's conference would see strong demand under this new architecture. Another key piece of equipment that NVIDIA believes will be present in the final form of 800VDC data centres to maximise efficiency is the solid-state transformer, which is not currently produced by any of the players in Goldman's coverage, although Schneider and ABB have commented on actively innovating in this space.

- Reduction in copper content: Lower current requirements at 800V lead to up to 45% reduction in copper mass. A conductor of the same size can carry approximately 157% more power at 800VDC versus 415VAC, fundamentally altering cable and busway economics. This means a shift from four-wire AC to three-wire DC (POS/RTN/PE) systems, reducing conductor and connector complexity. Because less current flows for the same power, thinner cables and smaller busbars are needed - Prysmian, Nexans, Legrand and Schneider have important exposure to cables and busbars within DCs. While optimized, both cables and busways remain integral components of the 800VDC power distribution. Additionally, some companies state that while the volume requirement for such equipment is lower, the quality required is higher, which raises ASPs and content per MW as demand shifts to DC busways, DC switchgear, and specialised cables, particularly liquid-cooled cables. While not used in data centre applications, Nexans produces liquid-cooled superconducting cables, Prysmian is developing DC-cooled solutions to meet requirements of fast charging, and NKT is working on superconducting cables. Additionally, ABB, Schneider and Siemens supply DC switchgear, and most electrical players supply DC busways.

- Decisive pivot to liquid cooling: As rack power scales from tens of kW to up to 1.2MW, this power density generates an unprecedented amount of heat, which traditional air-cooling systems are increasingly unable to dissipate efficiently. Air cooling systems fall short in handling the heat and increasing power needs of AI and modern computing. This drives a decisive pivot to liquid cooling with megawatt-class coolant distribution units. Schneider, through its Motivair assets, is the most exposed company in Goldman's coverage to liquid cooling, although Alfa Laval also has indirect exposure, collaborating with liquid cooling solution providers like Vertiv, hyperscalers, and contractors to be part of the system. Carel also provides controllers, humidification systems and sensors used throughout data center liquid cooling and traditional cooling systems.

- Redesigned protection and safety systems: Instead of distributing AC power through numerous AC switchboards, which are large assemblies of circuit breakers, switches, and control equipment, the 800VDC architecture streamlines this. In 800VDC architectures, these are being replaced by advanced DC safety breakers and solid-state protection devices. Solid-state circuit breakers are a key innovation in this area, offering superior speed and controllability compared to their mechanical counterparts. For instance, ABB's SACE Infinitus is noted as the world's first IEC-certified solid-state circuit breaker, specifically designed to make direct current distribution viable. Additionally, Schneider has commented on developing a solid-state switchgear, but it has not been commercialized yet.

- Increasing need for energy storage: The shift to 800VDC data centers demands greater battery storage to handle higher power volatility and bypass grid interconnection limits, given racks can surge from 30% to 100% utilization in milliseconds. Energy storage will evolve to an active “low-pass filter,” absorbing high-frequency spikes and smoothing load ramps. This supports layered strategies using fast-response capacitors for millisecond fluctuations closer to the rack and large BESS for longer ride-through closer to the interconnection. Goldman highlights Schneider as exposed to the move of energy storage closer to the racks.
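The "low-pass filter" role of storage described in the last bullet can be illustrated with a toy model. The sketch below applies first-order exponential smoothing to a hypothetical rack load that steps from 30% to 100%, and treats the gap between rack demand and the smoothed upstream draw as what storage would have to supply. The smoothing factor, time steps, and load trace are all made-up values for illustration. Real systems use power electronics and control loops far beyond this simplification.

```python
def low_pass(load: list[float], alpha: float) -> list[float]:
    """First-order exponential smoothing: y[t] = y[t-1] + alpha * (x[t] - y[t-1])."""
    smoothed = []
    y = load[0]  # start in steady state at the initial load
    for x in load:
        y += alpha * (x - y)
        smoothed.append(y)
    return smoothed

# Hypothetical rack load as a fraction of peak: jumps from 30% to 100%.
rack_load = [0.3] * 5 + [1.0] * 20

# What the upstream supply sees if storage smooths the ramp (alpha is arbitrary).
grid_draw = low_pass(rack_load, alpha=0.2)

# Storage covers the difference between rack demand and the smoothed draw.
storage_output = [r - g for r, g in zip(rack_load, grid_draw)]

largest_rack_step = max(b - a for a, b in zip(rack_load, rack_load[1:]))
largest_grid_step = max(b - a for a, b in zip(grid_draw, grid_draw[1:]))

print(f"Largest step at the rack:  {largest_rack_step:.2f} of peak")
print(f"Largest step upstream:     {largest_grid_step:.2f} of peak")
print(f"Peak storage contribution: {max(storage_output):.2f} of peak")
```

In this toy trace the upstream supply sees a gradual ramp instead of an abrupt jump, with storage briefly covering most of the step. That is the behaviour the layered capacitor-plus-BESS strategy is meant to provide at different timescales.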

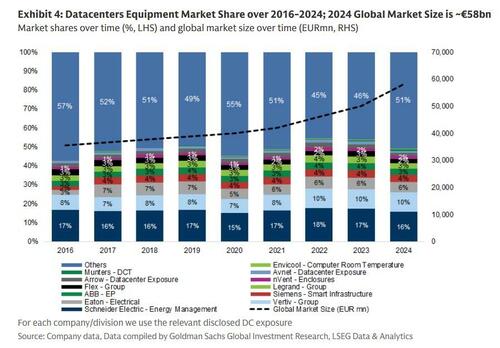

A visual summary of the data center equipment maker power share (for a far more detailed analysis, please see "Behind The $500 Billion Data Center Boom: Here's Who Makes All The Key Components").

In parting, the Goldman analyst notes that this trend is certain to affect the bank's coverage, although the size of the impact remains unclear; the emergence of such technology will also take time, and the bank expects to start seeing commercialization towards 2028.

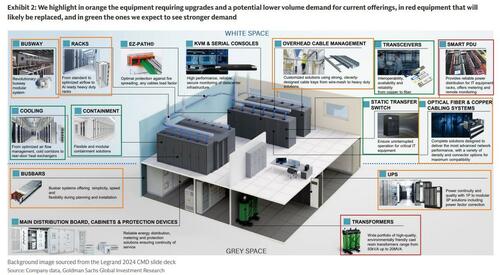

We conclude with a few charts, starting with a visual snapshot of what will and won't have to be replaced in the coming years alongside the transition to 800VDC: in orange is equipment requiring upgrades and facing potentially lower volume demand for current offerings, in red is equipment that will likely be replaced, and in green is equipment expected to see stronger demand.

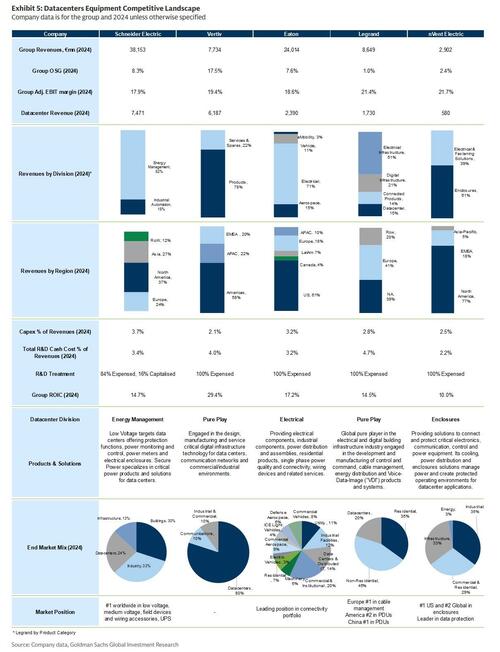

Next, a summarized product exposure by company.

Finally, a summary of the datacenter equipment competitive landscape.

Much more in the full Goldman note available to pro subscribers.