From 'Straw Man' To 'Steel Man', Goldman Lays Out The 7 Software Bear-Case Debates

Relative to prior Software sector corrections, the 2026 YTD correction is squarely anchored in terminal value debates: instead of analyzing a change in the near-term demand trendline or the mathematical impact of higher interest rates, the market is questioning software moats and business models.

While this debate centers primarily on application software companies, investors are also, to a lesser extent, questioning whether the infrastructure/security stack could be impacted, as well as the ROI tied to hyperscaler capex.

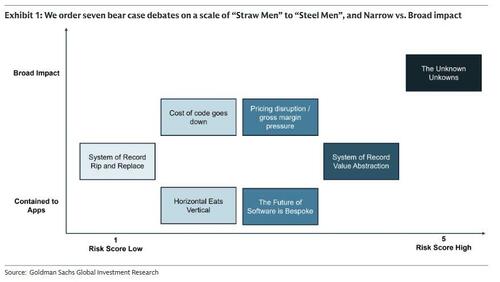

In this context, Goldman Sachs' Technology Research team has published a must-read note, "Revisiting Moats Part I: Exploring AI Steel Man Arguments", offering its thoughts on the seven primary bear cases it hears on Software. The team roughly ranks each bear case with a risk score from 1 (low) to 5 (high) and arranges them on an axis running from those narrowly confined to app software to those more broadly defined...

In the full 28-page note (which pro subs can read in full here), Goldman lays out the case for each debate in detail. Below we summarize each one, with its risk score and Goldman's view:

1. System of Record “rip and replace” - risk score 1

The bear case: New competitors come up with new ways to solve the system of record (SoR) problem of storing foundational business data, catalyzing a “rip and replace” movement that makes today’s SoR-centric software companies obsolete.

GS View: We see relatively low risk of this happening because GenAI is designed to be an analytical and generative engine, not a transactional engine, and assign a risk score of “1”. Being able to underwrite core systems of record staying intact (even in a world where they enable a new set of competition or where parts of their stack like the user interface are disintermediated) allows us to underwrite a terminal value that is not zero, but rather closer to where most SoR companies are currently trading.

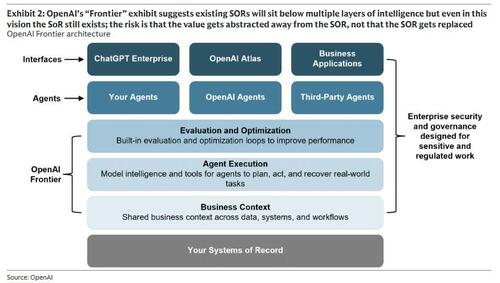

Key Question: What is the value of the System of Record within the stack? If Exhibit 2 holds true in a reasonable bear case scenario, the value of app software within the technology stack will effectively compress to the value of the System of Record, while the profit pools beyond the SoR could largely be captured by new competition.

Existing software incumbents today will become intelligent data storage layers - but even then, these layers do not have a terminal value of zero and thus can help underwrite valuation support.

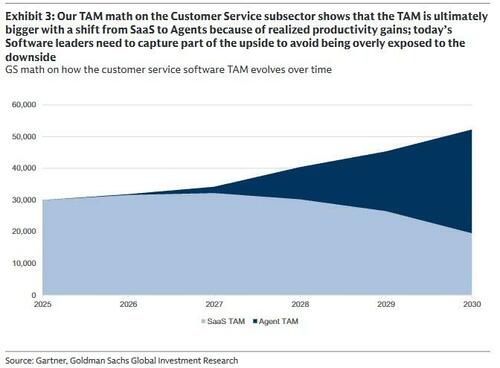

2. Value accrues away from Systems of Record and towards the Agentic Operating System - risk score 4

The bear case: New AI tools sit on top of the existing Software stack and capture incremental value. While SoRs remain necessary for data storage and compliance, they increasingly function as infrastructure rather than primary value creators. Today’s application software leaders have limited exposure to growth and outsized exposure to seat count reductions or commoditization.

GS View: The risk from AI in our view is less about rip and replace and more about value abstraction. The biggest advantage that incumbents have is their domain experience and context. We expect select incumbents will be able to prove to customers that this domain experience drives better AI outcomes, provided they have been thoughtful about cleaning up tech debt/keeping their stack dynamic and have innovated as fast followers. The incumbents are not static: many have world-class engineering teams that benefit from the same AI productivity gains as startups, as well as founder involvement and strong cash balances to respond to new competitive threats. However, not all companies are well positioned to execute in a period of intensifying competition, and we assign this bear case a risk score of “4”.

3. Horizontal eats Vertical - risk score 2

The bear case: Horizontal providers will use their AI-powered tooling to give customers the ability to build vertical-specific workflows within horizontal platforms. This calls into question the long-term sustainability of the competitive moats that have historically driven significant pricing power for vertical software incumbents.

GS View: We continue to believe that the characteristics of vertical software, and their respective end markets, position these businesses to benefit from increasing adoption of AI technologies, and we see relatively low risk to the sustainability of these competitive moats, assigning a risk score of “2”.

4. Lower cost of code - risk score 2

The bear case: AI coding tools allow developers to be more productive and enable a wider group of people to develop software, reducing the cost of software development and the barrier to entry.

GS View: We agree that the cost of code is a key input to the cost of building a software application, and that the cost of producing code is coming down, which will lead to new entrants. However, we currently feel more comfortable about the risk here as there is a substantial difference between coding a product and developing that product into a company: there is more to a successful software company than simply the cost of code. We assign a risk score of “2”.

5. The future of software is bespoke - risk score 3

The bear case: As the cost of code goes down, more enterprises will choose to build their own apps tailored to specific workflows, data, and objectives, instead of buying software from software vendors. This opportunity accrues to infrastructure software vendors (such as Databricks and Snowflake), LLM providers, or Palantir.

GS View: We do not believe the cost of code coming down changes the build vs. buy calculus universally, although we do expect to see some share capture by custom software in the enterprise. In our view, this is less a question about vibe coding and more a question of internal IT choosing to build enterprise-grade software in house because they believe they can do so at lower cost and with better performance. This distills down to the classic question of insourcing vs. outsourcing: while the cost of building the software may go down, the cost and responsibility of maintaining it (even with agentic efficiencies) will still compound over time. Even in a scenario where the cost of maintaining software applications internally goes down, it will also go down at the specialized providers, such that the specialized provider's performance-to-cost frontier will generally stay ahead of the in-house technology. We see the most likely custom software share capture in the space that falls between typical systems of record (e.g., between back office and front office), where work requires coordination across multiple business units or teams that packaged software may not otherwise have connected. We assign a risk score of “3”.

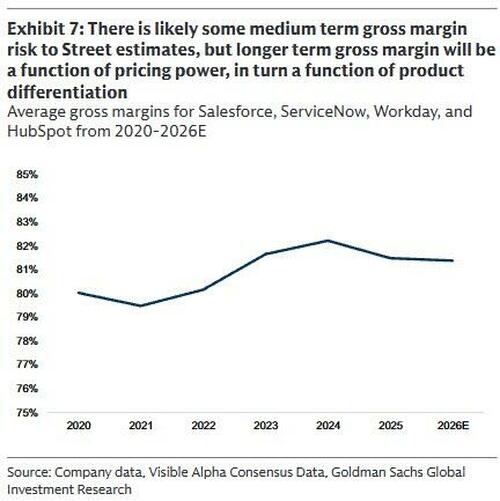

6. Gross margin pressure & the “LLM Tax” - risk score 3

The bear case: Software’s high gross margins (70–90%) are structurally unsustainable in an AI‑centric landscape. As competition intensifies, new entrants may deliver comparable or superior outcomes with leaner cost structures (having started with a clean sheet of paper and no incumbent profit pool to protect). At the same time, the marginal cost of production at existing providers rises from 10–20% (the cost of CPU-based hosting) to something greater (the cost of GPU-based inference).

GS View: We expect a 12–24 month period of modest gross margin pressure in aggregate, as many companies will focus on customer adoption before monetization and choose to absorb the cost of GPU inference and LLM APIs. However, for the industry, inference costs will likely continue to decline over time relative to the value (or amount) of the productivity that AI delivers. For any specific company, gross margin is ultimately a function of pricing power, which in turn is a function of product differentiation (where incumbents could have an edge if they convert their domain experience into higher-quality outcomes). Our investment process will favor those companies that can show product differentiation. Given uneven outcomes across companies, we assign a risk score of “3”.

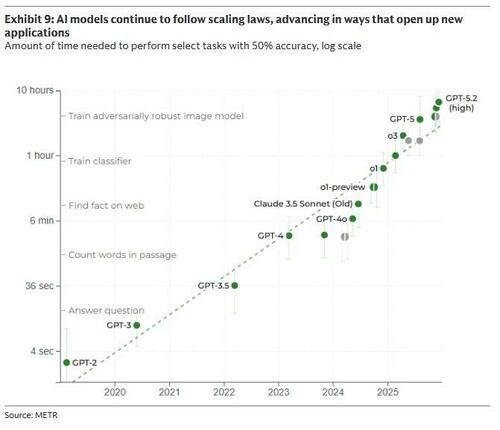

7. The pace of innovation increases uncertainty - risk score 5

The bear case: The technology is changing rapidly, meaning the end state is difficult if not impossible to predict. Scaling laws still hold. YTD, there have been notable updates from Anthropic (Cowork, Opus 4.6, vertical plug-ins), OpenAI (Frontier, OpenClaw), Google DeepMind (Deep Think) and Meta (Avocado). This creates substantial uncertainty about the future of knowledge work, the economic structure of the Software industry, and the terminal value of the companies - and uncertainty is a low-multiple business.

GS View: This risk is the hardest to plan for, and our due diligence continues to include leading-edge AI startups and AI academics. However, uncertainty could also surface new market opportunities: consider the potential for TAM creation in medical diagnostics or energy, as Microsoft discusses in its superintelligence blog. We assign a risk score of “5”.

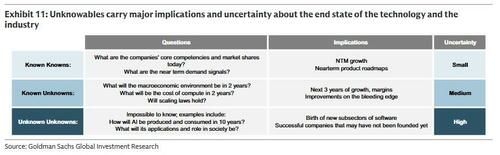

Unknown unknowns

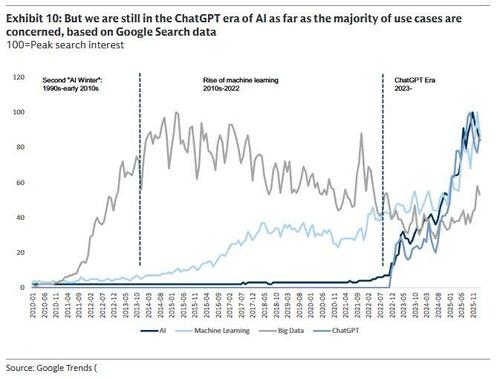

Some technological problems are known to us now, and we can speculate as to how they will evolve. For example, scaling and efficiency laws can help us make predictions about model capabilities or token usage 1-2 years out, and there is already discussion in the academic world about whether LLMs can truly make progress toward AGI, and about alternative neural network approaches. These are the known unknowns. But not all advances are immediately obvious; there will be breakthroughs that fundamentally alter what is possible, and consequently the end state of the industry. We are still arguably living in the ChatGPT era, where for most people AI means interacting with intelligent chatbots (Exhibit 10).

This could change in ways that are impossible to predict, which in turn would have implications for the companies the technology touches. An observer in 1993 would have found it difficult to predict what Web 2.0 would look like, and few observers in December 2022 would have predicted Claude Cowork/Clawdbot and their impact on the industry narrative.

Such unknown unknowns make it impossible to accurately predict the future state of artificial intelligence. This does not mean that negative outcomes are inevitable (or even likely), but it does make them harder to rule out in the near term. This drives uncertainty.

* * *

Goldman's goal is to revisit this topic of moats over the next several months to better understand barriers to entry, product differentiation and pricing power. The team concludes that its key observation over the past four weeks has been how quickly the agentic technology ecosystem is evolving, which makes it challenging to assess terminal values and thus to put a floor under valuations.

Nonetheless, Goldman offers signals to watch for stabilization in their note here.

Professional subscribers can read the full note "Revisiting Moats Part I: Exploring AI Steel Man Arguments" here at our new Marketdesk.ai portal.