CME Black-Friday Blackout Was Human Error

TL;DR – CME Data Center Outage

- Cooling system errors at CyrusOne began 12 hours before markets were disrupted, with procedural failures during cold-weather preparation causing overheating and total chiller shutdown.

- Severity assessments fluctuated throughout the day, delaying full recognition of the scale of the problem and limiting timely market communication.

- CME did not activate its New York disaster-recovery site, assuming the outage would be brief and seeking to avoid migration risks and data-integrity issues.

- The halt interrupted global futures trading across metals, energy, rates, and FX, exposing CME’s operational dependence on a single leased data-center node.

CME Cooling Failure and Derivatives Market Disruption

Cooling failures at CME’s primary data center began 12 hours before global trading halted, with procedural errors and shifting severity assessments delaying response. The exchange declined to activate its disaster backup site, resulting in widespread disruption across metals, energy, rates, and FX markets, highlighting concentrated dependence on a single external infrastructure provider.

I. Incident Overview

In late November 2025, CME Group suffered a technology failure at its primary data center in suburban Chicago, interrupting global derivatives trading across asset classes. The outage originated at CyrusOne, the private-equity-owned data center operator providing infrastructure support to CME under a long-term lease arrangement.

Breaking: Massive CME Outage Jolts Markets

GFN – CHICAGO: CME Group halted trading across its futures and options markets late Thursday after a data-center cooling failure disrupted the CME Globex platform, interrupting live pricing in commodities, Treasuries, equity-index futures and foreign exchange during early Asian trading.

Bloomberg reviewed a confidential 11-page root-cause report indicating that technical difficulties began approximately twelve hours before markets were affected, yet the full scale and persistence of the problem were not immediately recognized by market participants or exchange operators.

“The owner of the data center that serves CME Group Inc. started experiencing technical problems about 12 hours before an outage in its cooling system took down global markets.”

The disruptions ultimately halted trading activity from Tokyo through London and the United States, affecting contracts spanning precious metals, energy, interest rates, and currencies.

II. Pre-Outage Timeline

CyrusOne notified CME of emerging cooling system issues at 4:19 a.m. Central Time on November 27, during the US Thanksgiving holiday. The firm later sent a follow-up text message to clients at 10:19 a.m. CT confirming awareness of the malfunction.

Despite those communications, broader market participants remained unaware of the situation until trading was unexpectedly halted during Asian hours later that day.

A detailed internal timeline shows repeated adjustments to the reported severity of the incident over the course of the day:

- Initial detection and notification: 4:19 a.m. CT

- Severity downgraded: roughly two hours after initial escalation

- Severity upgraded to higher risk: 12:13 p.m. CT

- Escalation to Level 1 severity: 4:10 p.m. CT, just ahead of the Asian trading session

During this sequence, the scope of the cooling failure remained unclear even internally, leading to inconsistent assessments of whether the problem would be brief or prolonged.

“During the outage, CyrusOne alternately lowered and raised the severity rating of the incident before the market opened for Asian trading.”
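The gaps between the timestamped events in this timeline can be checked with a few lines of arithmetic. The following is a minimal sketch, assuming all times are Central Time on November 27 as stated above; the event labels and the helper names `elapsed_since_first` and `fmt` are illustrative and do not come from the report. The severity downgrade is left out because the article gives no exact time for it.

```python
from datetime import datetime

# Timestamped events from the timeline above (Central Time, November 27).
# The downgrade is omitted: the article only says "roughly two hours" after escalation.
EVENTS = [
    ("CyrusOne notifies CME", "04:19"),
    ("Follow-up text to clients", "10:19"),
    ("Severity upgraded", "12:13"),
    ("Escalation to Level 1", "16:10"),
]


def elapsed_since_first(events):
    """Return (label, whole minutes since the first event) for each event."""
    def parse(hhmm):
        return datetime.strptime(hhmm, "%H:%M")

    start = parse(events[0][1])
    return [
        (label, int((parse(hhmm) - start).total_seconds() // 60))
        for label, hhmm in events
    ]


def fmt(minutes):
    """Format a minute count as hours and minutes."""
    return f"{minutes // 60}h {minutes % 60:02d}m"


for label, minutes in elapsed_since_first(EVENTS):
    print(f"{label}: +{fmt(minutes)}")
```

On these figures, the Level 1 escalation came 11 hours 51 minutes after the first notification, which is in line with the "about 12 hours" cited from the report, although the exact time of the trading halt is not given in this timeline.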

III. Mechanical Cause

The report traced the incident to process failures during a transition of cooling towers into cold-weather operations one day before the outage.

At 3:40 a.m. on November 27, errors during procedural changes resulted in overheated systems. The report indicated that these steps did not follow standard operating protocols, leading to cascading mechanical failure rather than stabilization.

Contributing errors included improper draining procedures undertaken by onsite staff and contractors.

“Onsite staff and contractors failed to follow standard procedures for draining the cooling towers.”

Attempts to remediate the initial overheating inadvertently worsened the problem. By 6:19 p.m. CT, all chillers servicing the facility were offline or in faulty condition, fully compromising temperature control inside the data center.

CyrusOne publicly attributed the incident to human error, a characterization acknowledged by CME in its own December 6 statement, which added that the operator’s “initial remediation attempts further exacerbated the problem.”

IV. Disaster Recovery Decision Process

CME maintains a disaster recovery framework designed to transition operations to a backup data center located in the New York region during major outages. However, the exchange chose not to activate that contingency during the November incident.

According to the confidential analysis cited by Bloomberg, CME’s decision not to switch facilities rested on an early assumption that the outage would be brief and quickly resolved.

Emergency data center transfers are uncommon across financial infrastructure operators for several reasons:

- Risks of data inconsistencies or synchronization errors during transition.

- The possibility of software bugs or mismatches that may not be apparent during ordinary testing.

- Latency advantages enjoyed by firms with equipment colocated near the primary facility.

Market-making firms prefer physical proximity to exchange servers to minimize delays in transmitting order information, which gives them a small but measurable trading advantage.

V. Market Impact

CME’s Chicago facility processes derivatives trades measured in trillions of dollars daily, covering:

- Precious metals (including gold)

- Energy contracts

- Interest rate derivatives

- Currency futures

- Equity-linked derivatives

The outage suspended trading during overlapping Asian and European hours, preventing price discovery and liquidity provision across multiple markets.

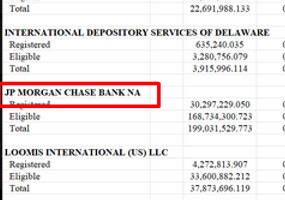

JPM Reportedly Made 13.4 Million Ounces Unavailable During CME Shutdown

On Friday, November 28, 2025, CME Group’s daily Metal Depository Statistics report, covering activity dated November 26, 2025, showed no physical withdrawals of silver from COMEX warehouses.

Instead, the significant decline in registered silver inventory, approximately 12 million ounces, was attributed entirely to internal reclassification adjustments rather than physical removals.

The operational disruption extended beyond CME itself. CyrusOne was forced to suspend a $1.3 billion mortgage-bond sale being arranged by Goldman Sachs due to the facility’s technological instability.

When service resumed, trading was restored gradually. CME’s Globex Futures & Options platform, representing approximately 90 percent of CME’s transaction volume, reopened at 7:30 a.m. Chicago time on November 28.
