GPUs vs. ASICs and The Inference Cost Curve

From the TightSpreads Substack.

By Goldman’s James Schneider

In response to investor questions on the GPUs vs. ASIC debate, we have attempted to quantify performance/cost dynamics by building an “inference cost curve” to compare solutions from different chip vendors and their competitiveness over time. We share our thoughts on the ongoing debate around competition between merchant solutions (Nvidia & AMD) and custom ASIC solutions (TPU & Trainium), and lay out four potential future scenarios for the evolution of AI.

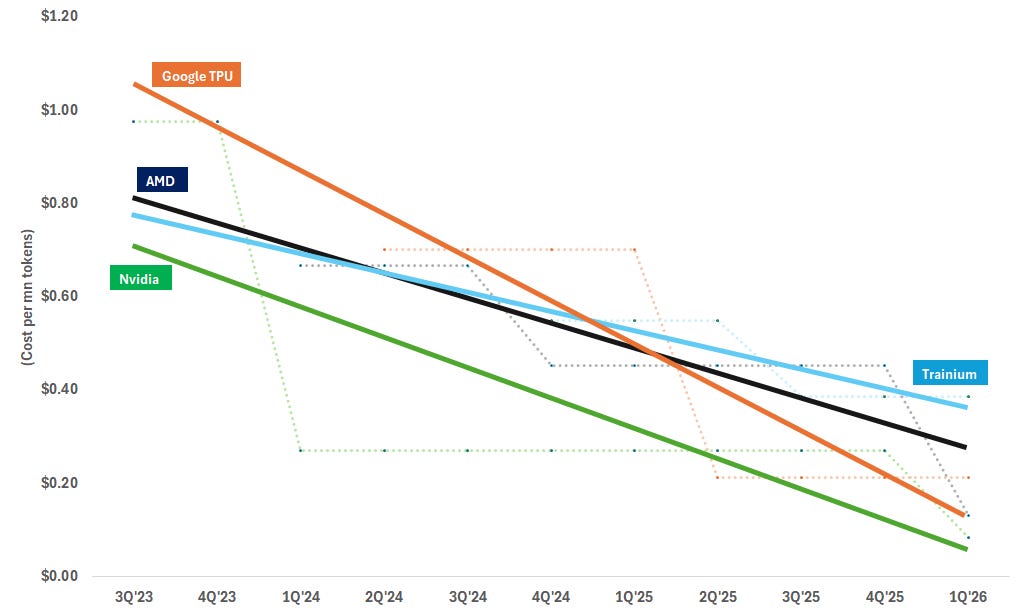

Our analysis suggests that Google/Broadcom’s TPU is rapidly closing the gap with Nvidia’s GPU solutions on compute cost performance, while Trainium and AMD are lagging. We estimate a ~70% reduction in cost per token from TPU v6 to TPU v7, which puts TPU v7 on par with or slightly better than Nvidia’s GB200 NVL72 in terms of absolute cost. However, we believe Nvidia remains ahead on “time to market,” and its CUDA software remains a key moat for enterprise customers. In comparison, we estimate Amazon’s Trainium and AMD solutions have delivered ~30% cost reduction and lag Nvidia and Google solutions on absolute cost. In late 2026, we expect AMD’s rack-level solutions to be more competitive with Nvidia’s Rubin and Google’s TPU in terms of compute performance. Since AWS re:Invent, our checks suggest that Trainium 3 and 4 may offer stronger performance that rectifies some of Trainium 2’s challenges, and we will continue to monitor developments on this front.

With compute dies already at reticle limits, we view advancements in networking, memory and packaging technologies as critical to driving further cost reductions going forward.

Caveat: Our analysis is based on a snapshot in time of today’s compute performance. Our methodology is focused on accelerator compute performance and does not fully contemplate software optimization or performance uplift from new networking, memory or packaging technologies.
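The note does not publish the model behind its inference cost curve. As a minimal sketch of how a cost-per-token metric and a generation-to-generation reduction could be computed, the Python below assumes cost is an hourly system cost divided by token throughput. The function names and every input figure are hypothetical placeholders, not Goldman Sachs data.

```python
def cost_per_million_tokens(hourly_cost_usd: float, tokens_per_second: float) -> float:
    """Dollar cost to generate one million tokens (assumed definition)."""
    tokens_per_hour = tokens_per_second * 3600
    return hourly_cost_usd / tokens_per_hour * 1_000_000


def pct_reduction(old_cost: float, new_cost: float) -> float:
    """Fractional cost reduction from one generation to the next."""
    return (old_cost - new_cost) / old_cost


# Hypothetical inputs for illustration only, not figures from the note.
prev_gen = cost_per_million_tokens(hourly_cost_usd=9.0, tokens_per_second=5_000)
next_gen = cost_per_million_tokens(hourly_cost_usd=13.5, tokens_per_second=25_000)

print(f"previous generation: ${prev_gen:.2f} per million tokens")
print(f"next generation:     ${next_gen:.2f} per million tokens")
print(f"reduction:           {pct_reduction(prev_gen, next_gen):.0%}")
```

Under this assumed definition, a system can cost more per hour and still be cheaper per token if throughput rises faster than price, which is the kind of trade-off a cost curve of this sort is meant to surface.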

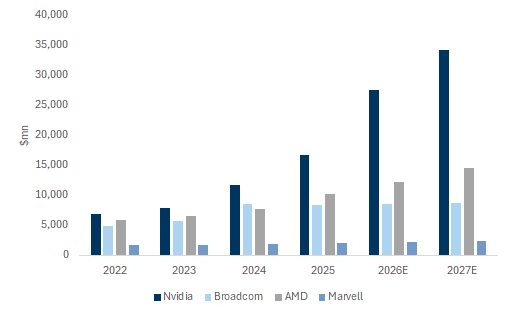

We continue to prefer Broadcom and Nvidia within the compute ecosystem, as we view them as tied to the most sustainable elements of AI CapEx and as the biggest beneficiaries of advancements in networking technologies. We could be more constructive on AMD if we see on-time OpenAI deployments driving fundamentals in 2H26 and/or see new hyperscaler customer wins.

AI Economics: Our thoughts on the GPU vs. ASIC debate

Exhibit 1: Inference cost per million tokens ($)

Source: Company data, Goldman Sachs Global Investment Research

The Bottom Line: We believe Google’s TPU has made significant inroads on raw compute performance to compete closely with Nvidia’s GPUs for inference applications; AMD and Amazon’s Trainium currently lag these market leaders. We believe Nvidia retains its time-to-market advantage, and this, plus its “CUDA moat” and its rapid pace of innovation, should enable it to maintain leadership in the accelerator market in the near term. We expect Google/Broadcom TPUs to gain share for Google’s internal workloads, and for customers (such as Anthropic) who have the internal software talent to develop on TPU/ASIC platforms. Longer term, we see a growing presence of custom ASICs for internal workloads, and expect hyperscalers to selectively adapt their internal offerings for use by external customers. As raw compute performance reaches physical limits (accelerators are already reticle limited), we expect further performance and cost improvements to be driven by innovation across networking, memory, and packaging. We see Nvidia and Broadcom as best positioned to leverage developments in these areas. Nvidia is significantly outspending competitors on R&D, is strongly positioned in networking with its Mellanox business, and has also started to deliver innovations in memory technology with its context memory storage controller offering. Broadcom also continues to lead the market with its best-in-class processor/accelerator solutions plus its industry-leading ethernet networking and SERDES capabilities.

The key conclusions from our analysis are as follows:

- TPUs (from Google/Broadcom) are rapidly closing the gap with Nvidia’s merchant solutions based on raw compute cost performance. Our inference cost curve analysis shows strong generation-to-generation cost reductions for inference applications using Google/Broadcom TPUs (~70% reduction from TPU v6 to TPU v7), bringing the cost per million tokens on par with or below Nvidia’s solutions (GB200 NVL72). This is directionally consistent with Google’s increased usage of TPUs for its internal workloads (including training its Gemini models), and increasing usage at customers with strong software capabilities, most notably at Anthropic, which has placed orders worth $21bn with Broadcom (shipments expected in mid-2026).

- Cost reductions are less steep for AMD and Amazon, although we expect AMD’s MI455X rack and Trainium 3/4 to potentially be more competitive with Nvidia and Google. Based on our analysis, which is primarily driven by raw compute performance, we believe that AMD and Amazon currently lag behind Nvidia and Google TPUs in terms of both generation-to-generation cost reductions and absolute inference cost. Although these solutions can be further optimized for certain workloads, we view the threat to Nvidia from current AMD and Amazon solutions as relatively low at this stage. Looking ahead, we believe AMD’s rack-level solutions could be more cost competitive, with AMD claiming that its rack-level solution will be on par with Nvidia’s VR200 for training and inference. Since AWS re:Invent, our checks suggest that Trainium 3 and 4 may offer stronger performance that rectifies some of Trainium 2’s challenges, and we will continue to monitor developments on this front.

- Networking, memory and packaging technologies are the new bottlenecks to AI compute, and are increasingly important to drive cost reduction. As compute dies are already pushing reticle limits, we expect advancements in compute-adjacent technologies (namely networking, memory and advanced packaging) to be increasingly critical to deliver performance and lower cost. We have seen many examples of innovation in these adjacent technologies, such as (1) introduction of scale-up and scale-across ethernet to increase system bandwidth and connect more GPUs to act as a single unit; (2) continued integration of High-Bandwidth Memory (HBM) and NAND flash solutions to increase both training and inference performance, as per Nvidia’s recent announcement of its context memory storage controller; (3) TSMC’s latest Chip-on-Wafer-on-Substrate (CoWoS) technology to integrate two GPU dies in a single package (Nvidia’s Blackwell), with four GPU dies expected for Nvidia’s Rubin-Ultra in 2027; (4) increasing densification of rack-level solutions (multiple GPUs acting together with Nvidia, and similar solutions from AMD and ASICs); (5) introduction of co-packaged optics (CPO).

-

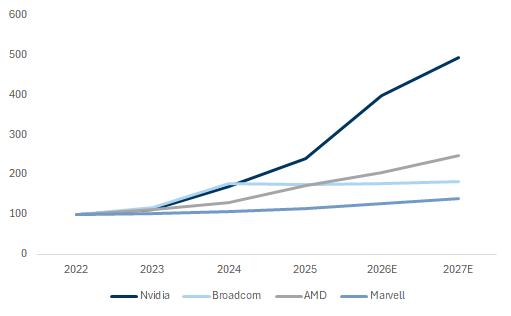

We expect Nvidia to remain ahead of competitive solutions in the near term as the industry continues to move at a rapid pace. As AI workloads and models continue to move at a rapid pace with more than a few vectors of growth still ahead of us (physical AI being the next industry demand driver in our view), we expect Nvidia to sustain its lead in the accelerator market driven by a combination of innovation across the full datacenter stack (hardware plus software). Although our cost analysis suggests that Google/Broadcom’s TPU v7 is on par with GB200 NVL72 on a cost-per-token basis, we note that Nvidia’s time to market remains fastest with an annual product launch cadence - and further performance improvements are expected with GB300 NVL72 (already shipping) and VR200 NVL144 (on track for 2H26 shipments). We believe this edge should help Nvidia to maintain its lead at many key customers, including hyperscalers outside of Google - where ASIC development efforts have progressed more slowly.

Exhibit 2: Nvidia is expected to spend more on OpEx in 2026/27 than its competitors combined

Source: Company data, Goldman Sachs Global Investment Research

Exhibit 3: Despite a much larger base and leadership position in accelerators, Nvidia is growing its OpEx at a much faster pace than its competitors

OpEx (indexed to 100)

Source: Company data, Goldman Sachs Global Investment Research

-

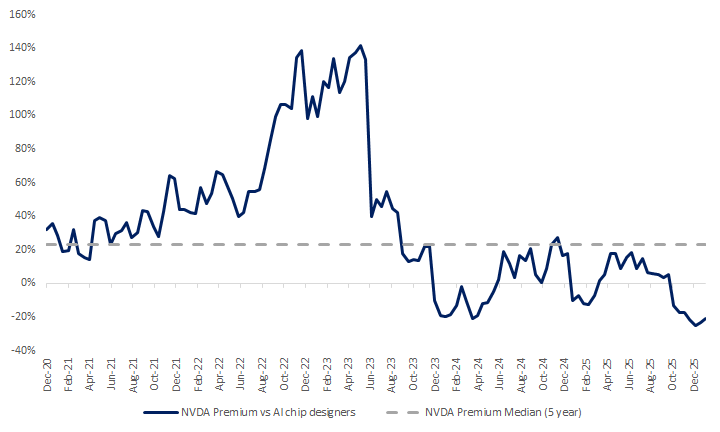

We believe structural questions on the sustainability of Nvidia’s competitive moat continue to weigh on the stock’s multiple. We believe dramatic cost reduction for inference applications is imperative for broader adoption and long-term profitability of the AI ecosystem. Given ASICs (especially TPUs) are already achieving significant cost reductions and converging toward parity with Nvidia solutions, Nvidia’s gross margins remain well ahead of competitors, and hyperscaler CapEx remains elevated with core LLM profitability still elusive, Nvidia’s stock multiple is under pressure. For context, NVDA has traded at a 25% discount to median AI-chip peers over the past two years vs. a 20% premium over a five-year lookback. For Nvidia’s stock to narrow that discount range or trade at a premium again, we believe we would have to see some combination of (1) LLM performance benchmarks of models trained on Blackwell which show a compelling performance step-up (as early as March 2026); (2) meaningfully lower cost per token for Nvidia vs. competing solutions; (3) monetization of AI in enterprise use cases.

Exhibit 4: Nvidia currently trades at a ~25% discount to the median AI chip designer stock, vs. a 5-year median premium of 20%, on an NTM P/E basis

AI chip designers include AVGO, AMD and MRVL

Source: FactSet

- We are closely watching AI software development from hyperscalers and AMD. Even as competitors continue to enhance their hardware stacks to drive hardware performance, we believe CUDA and a rich developer ecosystem are a key factor in Nvidia maintaining its competitive edge, as the ability to move workloads to competing solutions remains relatively limited, especially among enterprise customers.

- In the long run, we see the GPU vs. ASIC debate developing along one of four potential scenarios. However, we see ASICs benefiting in all these scenarios to varying degrees.

- Scenario #1: Limited traction in enterprise and consumer AI. If mass adoption of AI remains limited to a handful of use cases (coding, back-office process automation, etc.), we could see moderating CapEx levels across the industry. Although this would be a broadly negative outcome for both GPU and ASIC solutions, the lack of application evolution would mean faster ASIC adoption in our view.

- Scenario #2: Continuing scaling of consumer AI, but limited traction for enterprise AI. In this scenario, we believe the most important thing from Nvidia’s perspective would be its continued dominance in the training market, as workloads could become more static and concentrated among hyperscaler use cases, which we expect would drive more ASIC adoption.

- Scenario #3: Continuing scaling of consumer AI, and moderate traction for enterprise AI. Similar to scenario #2, the most important thing for Nvidia would be its continued dominance in the training market. However, we would expect Nvidia to benefit from more incremental revenue opportunities (at very strong market share) for enterprise customers.

- Scenario #4: Strong scaling of both consumer and enterprise AI across an increasing set of use cases. In this most bullish scenario, we would expect LLM providers and startups to eventually become profitable while training intensity remains high given the expansion of use cases across multimedia models, physical AI, and other applications. We believe this is the most beneficial scenario for Nvidia given its dominance in the training market, and this would further bolster its “time-to-market” and CUDA moat. We expect ASICs to also benefit as an increasing number of workloads achieve scale, but these would likely gain market share at a slower rate.


Stock Implications and Estimate Changes

Nvidia (Buy): We expect Nvidia to maintain its competitive lead in the accelerator market in the medium term, as AI models and software continue to evolve at a rapid pace. We expect near-term strength in Nvidia’s fundamentals, driven by continued demand among hyperscalers and non-traditional customers, to drive upside to Street estimates. With the stock trading at a discount to peers and our NTM EPS estimate 18% above VA consensus, we see a positive risk/reward skew and expect the stock to outperform our broader Digital semis complex over the next 12 months. We see hyperscaler earnings in late January and potential Blackwell-trained model releases from xAI and/or OpenAI as near-term catalysts for the stock.

Price target methodology and risks

We are Buy rated on NVDA. Our 12-month price target of $250 is based on a 30X P/E multiple applied to our normalized EPS estimate of $8.25. Key downside risks include: (1) slowdown in AI infrastructure spending; (2) share erosion due to increased competitive intensity; (3) margin erosion due to increased competition; (4) supply constraints.
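The price target arithmetic above can be checked in a few lines. This sketch assumes the target is simply the P/E multiple times normalized EPS, rounded to a convenient increment. The $10 rounding step and the function name are assumptions for illustration, since the note does not say how it rounds.

```python
def implied_price_target(pe_multiple: float, normalized_eps: float) -> float:
    """Price target as P/E multiple times normalized EPS."""
    return pe_multiple * normalized_eps


# Inputs taken from the note: 30X multiple, $8.25 normalized EPS.
raw_target = implied_price_target(pe_multiple=30, normalized_eps=8.25)

# Rounding to the nearest $10 is an assumption; the note does not state its rounding rule.
rounded_target = round(raw_target / 10) * 10

print(f"raw: ${raw_target:.2f}, rounded: ${rounded_target}")
```

The raw product is $247.50, which is consistent with the stated $250 target under the assumed rounding.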

Broadcom (Buy, on the CL): As noted above, TPUs have narrowed the gap with Nvidia’s GB200 solutions on a cost-per-token basis, and we expect increasing use of TPUs at Google and increased efforts to broaden their usage at external customers. We see Broadcom as a key beneficiary of this trend, coupled with its industry-leading ethernet networking capabilities. Following our recent Asia trip, we believe the market is underestimating the level of upside to AI Networking for Broadcom and expect positive revisions to Street estimates over the next few quarters. We raise our estimates in this note, and given that our updated FY26 EPS estimate stands 6% above VA consensus, we view the recent weakness in the stock as a strong buying opportunity.

Exhibit 5: AVGO - New vs. Old estimates
